Opsview 6.8.x End of Support

With the release of Opsview 6.11.0 in February 2025, versions 6.8.x have reached End of Support (EOS) status, in accordance with our Support policy. This means that versions 6.8.x will no longer receive code fixes or security updates.

The documentation for version 6.8.9 and earlier versions will remain accessible for the time being, but it will no longer be updated or receive backports. We strongly recommend upgrading to the latest version of Opsview to ensure continued support and access to the latest features and security enhancements.

Migrate to new hardware Virtual Appliance

Overview

This document describes the steps required to migrate an existing Opsview Monitor 5.4.2 Virtual Appliance (VA) system to Opsview Monitor 6.3.x VA. Existing Opsview 5.4 slaves will be repurposed as Opsview 6.3 collectors.

We will be using Opsview Deploy to set up and configure the new environment.

New, randomly generated credentials will be used on the new system. However, the security wallet key will be migrated, so existing encrypted data remains usable.

During this process, the Opsview 5.4.2 slaves will be upgraded to 6.3 Collectors. The upgraded Collectors will no longer be able to communicate with the old 5.4.2 master. If you would like to run the two systems in parallel, provision new servers to act as the new collectors.

Note

Databases must use the default names, i.e. opsview, runtime, odw and dashboard.

Summary of process

- Pre-migration validation - to check for known issues that can impact the migration.

- Deploy new 6.3.x VA system.

- Move the security wallet key (opsview_core_secure_wallet_key_hash: 'KEY') from the old server to the new server.

- Migrate data from old database to new database server.

- Migrate data from old timeseries server (InfluxDB or RRD) to new timeseries server.

- Convert old slaves to new collectors.

- Apply changes and start monitoring in 6.3.

- Other migration tasks.

In this document, we will use the following hostnames:

| Purpose | Old host name | New host name |
|---|---|---|
| Master (5.4) / Orchestrator (6.3) server | oldmasterserver | newmasterserver |
| Slave (5.4) / Collector (6.3) server | oldslaveserver | newcollectorserver |

There will be an outage to monitoring while this migration is in progress.

Summary of differences

The main differences are:

- Opsview Deploy will be used to manage the entire distributed Opsview system.

- The file system locations have changed from /usr/local/nagios and /usr/local/opsview-web to /opt/opsview/coreutils and /opt/opsview/webapp respectively.

- All files are owned by root, readable by the opsview user and run as the opsview user.

- Slaves will become Collectors.

- New, randomly generated credentials will be used for database connections.

Prerequisites

Activation key

Ensure you have an activation key for your new system - contact Opsview Support if you have any issues.

Hardware

As existing 5.4 slaves will be converted to 6.3 collectors, ensure they meet the minimum hardware specifications for collectors.

Check Hardware Requirements when sizing your new Opsview 6.3 Collectors.

Opsview Deploy

Network Connectivity

Due to the new architecture, different network ports are required from the Orchestrator to the Collectors. Ensure these are set up before continuing, as documented in Managing Collector Servers.

Please read the List of Ports used by Opsview Monitor.

During this process, you will need the following network connectivity:

| Source | Destination | Port | Reason |
|---|---|---|---|
| newmasterserver | oldslaveservers | SSH (22) | For Opsview Deploy to set up collectors |
| newmasterserver, newcollectorservers | downloads.opsview.com | HTTPS (443) | For installing Opsview and third-party packages |
| oldmasterserver | newmasterserver | SSH (22) | For migrating data |
| oldslaveservers | newcollectorservers | SSH (22) | For migrating Flow data |

SNMP configuration

As 5.4 slaves will be converted to 6.3 collectors and SNMP configuration will be managed by Opsview Deploy, back up your existing SNMP configuration so that you can refer back to it. See SNMP Configuration further down this page for the list of files to back up.
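If you prefer to script this backup, the function below is a minimal sketch. It assumes a POSIX shell and takes its file list from the SNMP configuration section later on this page; the function name, its arguments and the optional ROOT prefix (there only so it can be tried on a scratch directory) are hypothetical and not part of Opsview.

```shell
#!/bin/sh
# Hypothetical helper: copy the SNMP files that Opsview Deploy overwrites
# into DEST, keeping their original paths (e.g. DEST/etc/snmp/snmpd.conf).
# Usage: backup_snmp_config DEST [ROOT]   (ROOT defaults to /)
backup_snmp_config() {
    dest=$1
    root=${2:-/}
    root=${root%/}          # "/" becomes "", so the paths stay absolute
    mkdir -p "$dest" || return 1
    for f in /etc/default/snmpd /etc/snmp/snmpd.conf /etc/snmp/snmptrapd.conf \
             /etc/sysconfig/snmpd /etc/sysconfig/snmptrapd; do
        # Each distribution only has some of these files; skip the others.
        [ -f "$root$f" ] || continue
        mkdir -p "$dest$(dirname "$f")" || return 1
        cp -p "$root$f" "$dest$f" || return 1
        echo "backed up $f"
    done
}
```

For example, running backup_snmp_config /root/snmp-backup as root on oldmasterserver and on each old slave would leave copies such as /root/snmp-backup/etc/snmp/snmpd.conf.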

Pre-migration validation

Opsview Monitor assumes that a particular host in the database (host ID 1) is the Master server. To confirm this before you begin the migration, run the following command as the nagios user on oldmasterserver:

/usr/local/nagios/utils/cx opsview "select hosts.name,ip,alias,monitoringservers.name as monitoring_server from hosts,monitoringservers where monitoringservers.host=hosts.id and monitoringservers.id=1 and hosts.id=1"

You should get back output similar to the following:

+---------+-----------+-----------------------+--------------------------+
| name    | ip        | alias                 | monitoring_server        |
+---------+-----------+-----------------------+--------------------------+
| opsview | localhost | Opsview Master Server | Master Monitoring Server |
+---------+-----------+-----------------------+--------------------------+
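If you run this check from a script, the decision can be automated. The sketch below assumes the cx output is a MySQL-style table like the one above, where the header and data rows start with "|"; the function name is hypothetical. Because the query filters on hosts.id=1 and monitoringservers.id=1, it can return at most one data row, and an empty result suggests the Master server is not host ID 1.

```shell
#!/bin/sh
# Hypothetical helper: read the cx table output on stdin and succeed only
# if exactly one data row (i.e. a non-header row starting with "|") is present.
check_master_row() {
    rows=$(grep '^|' | grep -v '^| *name *|' | wc -l | tr -d ' ')
    if [ "$rows" -eq 1 ]; then
        echo "OK: the Master server is host ID 1"
        return 0
    fi
    echo "WARNING: expected 1 data row, got $rows" >&2
    return 1
}
```

For example, pipe the cx command above into check_master_row and investigate before migrating if it reports a warning.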

Disable the 5.x repositories

To prevent a conflict with older versions of packages, please ensure you have disabled or removed all Opsview repository configuration for version 5.x before starting the 6.3 installation.

On Ubuntu and Debian, check for lines containing downloads.opsview.com in /etc/apt/sources.list and each file in /etc/apt/sources.list.d, and comment them out or remove the file.

On CentOS, RHEL and OL, check for sections containing downloads.opsview.com in all files in /etc/yum.repos.d, and either set enabled=0 or remove the file.
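To find the files to edit, a small search over the usual repository locations can help. This is a sketch assuming GNU grep; the function name and the optional ROOT argument are hypothetical. It lists any file that mentions downloads.opsview.com, including lines that are already commented out, so each hit still needs a manual review.

```shell
#!/bin/sh
# Hypothetical helper: print every APT or YUM repository file that still
# mentions downloads.opsview.com.
# Usage: find_opsview_repo_files [ROOT]   (ROOT defaults to /)
find_opsview_repo_files() {
    root=${1:-/}
    root=${root%/}
    for path in "$root/etc/apt/sources.list" "$root/etc/apt/sources.list.d" \
                "$root/etc/yum.repos.d"; do
        [ -e "$path" ] || continue
        # -r recurses into directories, -l prints matching file names only;
        # "|| true" because "no match" is a normal outcome here.
        grep -rl 'downloads\.opsview\.com' "$path" 2>/dev/null || true
    done
}
```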

Deploy New Opsview 6.3

On newmasterserver, follow the instructions in Virtual Appliance to configure a new 6.3 system.

On newmasterserver, to keep your encrypted data from oldmasterserver, edit the file /opt/opsview/deploy/etc/user_secrets.yml and add the following line, replacing KEY with the contents of /usr/local/nagios/etc/sw.key from oldmasterserver (the "_hash" suffix is important):

opsview_core_secure_wallet_key_hash: 'KEY'
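The same edit can be scripted. This is a minimal sketch, assuming sw.key has first been copied from oldmasterserver to newmasterserver (for example to /tmp/sw.key) and holds the key on a single line; both function names are hypothetical. It refuses to add a second entry if one is already present.

```shell
#!/bin/sh
# Hypothetical helpers: build the user_secrets.yml entry from a copy of the
# old sw.key, and append it only once.
wallet_key_line() {
    # $(...) drops the trailing newline; sed doubles any single quote, which
    # is how a quote is written inside a single-quoted YAML string.
    key=$(sed "s/'/''/g" "$1") || return 1
    printf "opsview_core_secure_wallet_key_hash: '%s'\n" "$key"
}

add_wallet_key() {
    keyfile=$1
    secrets=$2
    if grep -q '^opsview_core_secure_wallet_key_hash:' "$secrets"; then
        echo "key already present in $secrets; check it by hand" >&2
        return 1
    fi
    # Make sure the new entry starts on its own line.
    if [ -s "$secrets" ] && [ -n "$(tail -c 1 "$secrets")" ]; then
        echo >> "$secrets"
    fi
    wallet_key_line "$keyfile" >> "$secrets"
}
```

For example: add_wallet_key /tmp/sw.key /opt/opsview/deploy/etc/user_secrets.yml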

As root on newmasterserver, stop all services.

/opt/opsview/watchdog/bin/opsview-monit stop all

Remove the existing security wallet keys:

rm /opt/opsview/coreutils/etc/sw.key

rm /opt/opsview/coreutils/etc/sw/ms1.key

For the following files on oldmasterserver, copy any configuration across to newmasterserver so that your system is set up similarly:

scp /usr/local/nagios/etc/opsview.conf USER@newmasterserver:/opt/opsview/coreutils/etc/opsview.conf

Any changes made to /usr/local/opsview-web/opsview_web_local.yml must be put into the deploy configuration files. For example, to set up LDAP or AD integration, see the LDAP configuration page.

On newmasterserver, edit the file /opt/opsview/deploy/etc/user_vars-appliance.yml.

Add the following lines to this file, changing the value from False to True for each purchased module currently installed on oldmasterserver:

opsview_module_servicedesk_connector: False

opsview_module_reporting: False

opsview_module_netaudit: False

opsview_module_netflow: False

Remove any reference to opsview_software_key (either delete the line or put a # comment character at the start), as an old software key can prevent activation from working correctly:

# opsview_software_key:
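If you would rather not edit the file by hand, the sketch below comments the line out with GNU sed (the -i.bak option keeps a backup copy of the original). The function name is hypothetical, and it assumes the key sits on a single line as in the example above.

```shell
#!/bin/sh
# Hypothetical helper: prefix any active opsview_software_key line with "# ".
# Lines that are already commented out start with "#" and are left alone.
comment_out_software_key() {
    sed -i.bak 's/^\([[:space:]]*opsview_software_key:\)/# \1/' "$1"
}
```

Running grep -l opsview_software_key /opt/opsview/deploy/etc/*.yml first shows which deploy files still contain the key.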

On newmasterserver, run the following command to drop the Opsview databases (IF EXISTS stops a missing database, such as jasperserver, from aborting the remaining statements):

echo "drop database if exists opsview; drop database if exists runtime; drop database if exists notifications; drop database if exists odw; drop database if exists jasperserver; drop database if exists dashboard" | mysql -u root -p`cat /opt/opsview/deploy/etc/user_secrets.yml |grep opsview_database_root_password | awk '{print $2 }'`
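The password lookup in the command above is repeated several times on this page. The function below is a sketch of the same lookup, assuming the simple "key: value" layout of user_secrets.yml and a password without spaces. Unlike the grep pipeline, it matches the key exactly, ignores commented-out lines, stops at the first match and strips one pair of surrounding quotes. The function name is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: print opsview_database_root_password from a
# user_secrets.yml file (default: the Opsview Deploy location used above).
db_root_password() {
    awk -v q="'" '
        $1 == "opsview_database_root_password:" {
            v = $2
            # strip one pair of surrounding single or double quotes, if any
            if (v ~ ("^[\"" q "].*[\"" q "]$")) v = substr(v, 2, length(v) - 2)
            print v
            exit
        }' "${1:-/opt/opsview/deploy/etc/user_secrets.yml}"
}
```

For example: mysql -u root -p"$(db_root_password)" -e 'SHOW DATABASES'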

Run the following command on newmasterserver to remove some of the existing Opsview packages (ignore any warnings):

apt-get remove --purge opsview-core-utils

Now run the following commands (each command must complete successfully before you move on to the next):

cd /opt/opsview/deploy

./bin/opsview-deploy -vvv lib/playbooks/setup-hosts.yml

/opt/opsview/watchdog/bin/opsview-monit stop all

./bin/opsview-deploy -vvv lib/playbooks/setup-infrastructure.yml

./bin/opsview-deploy -vvv lib/playbooks/setup-opsview.yml
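Because each command must succeed before the next one runs, the sequence can be wrapped so that it stops at the first failure. This is a hypothetical sketch, not part of Opsview Deploy; DEPLOY_DIR and MONIT are assumed variables that default to the paths used above.

```shell
#!/bin/sh
# Hypothetical wrapper for the four commands above; stops at the first
# command that fails and returns non-zero.
run_deploy_steps() (
    deploy_dir=${DEPLOY_DIR:-/opt/opsview/deploy}
    monit=${MONIT:-/opt/opsview/watchdog/bin/opsview-monit}
    # The function body is a subshell, so this cd does not leak out.
    cd "$deploy_dir" || exit 1
    ./bin/opsview-deploy -vvv lib/playbooks/setup-hosts.yml || exit 1
    "$monit" stop all || exit 1
    ./bin/opsview-deploy -vvv lib/playbooks/setup-infrastructure.yml || exit 1
    ./bin/opsview-deploy -vvv lib/playbooks/setup-opsview.yml || exit 1
)
```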

Restart all processes and confirm that they all start:

/opt/opsview/watchdog/bin/opsview-monit stop all

/opt/opsview/watchdog/bin/opsview-monit start all

watch /opt/opsview/watchdog/bin/opsview-monit summary -B

Migrate existing database to new database server

As the root user on oldmasterserver:

/opt/opsview/watchdog/bin/opsview-monit stop all

watch /opt/opsview/watchdog/bin/opsview-monit summary -B

Wait until all processes are “Not monitored”.

Then take a full backup of all your databases on oldmasterserver, making sure to include any extra databases you may have. The command below includes jasperserver; remove it from the list if that database does not exist. Replace PASSWORD with the MySQL root user's password:

mysqldump -u root -pPASSWORD --default-character-set=utf8mb4 --add-drop-database --extended-insert --opt --databases opsview runtime odw dashboard notifications jasperserver | sed 's/character_set_client = utf8 /character_set_client = utf8mb4 /' | gzip -c > /tmp/databases.sql.gz
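The fixed list in the mysqldump command fails if one of the named databases is missing. As a sketch, the hypothetical function below reads the names of the databases that exist (for example from the MySQL client's -N and -e options) and keeps only those from the list above, in the same order.

```shell
#!/bin/sh
# Hypothetical helper: read existing database names (one per line) on stdin
# and print those from the Opsview list that exist, space-separated.
dump_db_list() {
    existing=$(cat)
    list=
    for db in opsview runtime odw dashboard notifications jasperserver; do
        # -x: whole-line match, so "odw" does not match e.g. "odw_old"
        if printf '%s\n' "$existing" | grep -qx "$db"; then
            list="${list:+$list }$db"
        fi
    done
    printf '%s\n' "$list"
}
```

It could then replace the fixed list, for example with --databases $(mysql -u root -pPASSWORD -N -e 'SHOW DATABASES' | dump_db_list). Any extra databases of your own still need to be added by hand.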

Note

Backing up the system can take some time, depending on the size of your databases.

On oldmasterserver, copy the database dump to newmasterserver using a tool such as scp:

scp /tmp/databases.sql.gz USER@newmasterserver:/tmp
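Before importing, it can be worth confirming that the dump arrived intact. The sketch below wraps sha256sum from GNU coreutils; the function names are hypothetical.

```shell
#!/bin/sh
# Hypothetical helpers around sha256sum. make_checksum writes FILE.sha256
# next to FILE, recording only the base name so the check works wherever the
# two files end up together; check_checksum verifies it.
make_checksum() (
    cd "$(dirname "$1")" || exit 1
    sha256sum "$(basename "$1")" > "$(basename "$1").sha256"
)

check_checksum() (
    cd "$(dirname "$1")" || exit 1
    sha256sum -c "$(basename "$1").sha256"
)
```

For example, run make_checksum /tmp/databases.sql.gz on oldmasterserver, copy /tmp/databases.sql.gz.sha256 along with the dump, and run check_checksum /tmp/databases.sql.gz on newmasterserver before importing.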

Then on newmasterserver, run as the root user:

echo "drop database if exists opsview; drop database if exists runtime; drop database if exists notifications; drop database if exists odw; drop database if exists jasperserver; drop database if exists dashboard" | mysql -u root -p`cat /opt/opsview/deploy/etc/user_secrets.yml |grep opsview_database_root_password | awk '{print $2 }'`

# NOTE: this step can take some time to complete, depending on the size of your databases

# NOTE: If you get an error 'MySQL server has gone away' you may need to set 'max_allowed_packet=32M' in MySQL or MariaDB configuration

( echo "SET FOREIGN_KEY_CHECKS=0;"; zcat /tmp/databases.sql.gz ) | mysql -u root -p`cat /opt/opsview/deploy/etc/user_secrets.yml |grep opsview_database_root_password | awk '{print $2 }'`

On newmasterserver, run as the root user:

/opt/opsview/watchdog/bin/opsview-monit stop all

/opt/opsview/watchdog/bin/opsview-monit start opsview-loadbalancer

On newmasterserver, run as the opsview user:

/opt/opsview/coreutils/installer/upgradedb.pl

/opt/opsview/watchdog/bin/opsview-monit start all

Within the Opsview UI you should now see all of your hosts from your oldmasterserver.

Migrate Timeseries data to new Timeseries server

The following instructions depend on whether your current Timeseries Server is based on InfluxDB or RRD.

RRD based graphing data

If you use RRD, transfer the /usr/local/nagios/installer/rrd_converter script to /tmp on the oldmasterserver, then as root, run:

cd /tmp

chmod u+x /tmp/rrd_converter

/tmp/rrd_converter -y export

This will produce an rrd_converter.tar.gz file in /tmp.

To transfer this file to the newmasterserver, run the following (after updating USER and newmasterserver appropriately):

# If using RRD graphing

scp /tmp/rrd_converter.tar.gz USER@newmasterserver:/tmp

Then on newmasterserver as root, run:

cd /tmp

/opt/opsview/watchdog/bin/opsview-monit stop opsview-timeseriesrrdupdates

/opt/opsview/watchdog/bin/opsview-monit stop opsview-timeseriesrrdqueries

/opt/opsview/watchdog/bin/opsview-monit stop opsview-timeseriesenqueuer

/opt/opsview/watchdog/bin/opsview-monit stop opsview-timeseries

rm -rf /opt/opsview/timeseriesrrd/var/data/*

/opt/opsview/coreutils/installer/rrd_converter -y import /tmp/rrd_converter.tar.gz

chown -R opsview:opsview /opt/opsview/timeseriesrrd/var/data

sudo -u opsview -i -- bash -c 'export PATH=$PATH:/opt/opsview/local/bin ; /opt/opsview/timeseriesrrd/installer/migrate-uoms.pl /opt/opsview/timeseriesrrd/var/data/'

/opt/opsview/watchdog/bin/opsview-monit start opsview-timeseriesrrdupdates

/opt/opsview/watchdog/bin/opsview-monit start opsview-timeseriesrrdqueries

/opt/opsview/watchdog/bin/opsview-monit start opsview-timeseriesenqueuer

/opt/opsview/watchdog/bin/opsview-monit start opsview-timeseries
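The stop and start commands above act on the same four services in the same order, so they can be looped over. This is a hypothetical helper; MONIT is an assumed variable defaulting to the opsview-monit path used on this page. The rm, import, chown and migrate-uoms.pl steps still run between the stop and the start.

```shell
#!/bin/sh
# Hypothetical helper: stop or start the four RRD timeseries services in the
# order used above, stopping at the first failure.
timeseries_rrd_services() {
    case $1 in
        stop|start) ;;
        *) echo "usage: timeseries_rrd_services stop|start" >&2; return 2 ;;
    esac
    monit=${MONIT:-/opt/opsview/watchdog/bin/opsview-monit}
    for svc in opsview-timeseriesrrdupdates opsview-timeseriesrrdqueries \
               opsview-timeseriesenqueuer opsview-timeseries; do
        "$monit" "$1" "$svc" || return 1
    done
}
```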

InfluxDB based graphing data

If you use InfluxDB, run the following commands as root on oldmasterserver to back up the InfluxDB data:

influxd backup -portable /tmp/influxdb_backup

cp /opt/opsview/timeseriesinfluxdb/var/data/+metadata* /tmp/influxdb_backup/

cd /tmp

tar -zcvf /tmp/influxdb_backup.tar.gz influxdb_backup/
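Before copying the archive across, you may want to confirm that it contains both the copied +metadata* files and the influxd backup output. This sketch assumes GNU tar and treats every non-metadata file as backup output; the function name is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: check that an InfluxDB backup tarball contains the
# +metadata* files and at least one other (backup) file.
check_influx_backup() {
    listing=$(tar -tzf "$1") || return 1
    if ! printf '%s\n' "$listing" | grep -q '/+metadata'; then
        echo "no +metadata files in $1" >&2
        return 1
    fi
    # Drop directory entries (ending in /) and metadata files; anything left
    # is assumed to be output from influxd backup.
    if ! printf '%s\n' "$listing" | grep -v '/$' | grep -vq '/+metadata'; then
        echo "no backup files in $1" >&2
        return 1
    fi
    echo "OK: $1"
}
```

For example: check_influx_backup /tmp/influxdb_backup.tar.gz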

Then run the following (after updating USER and newmasterserver appropriately):

scp /tmp/influxdb_backup.tar.gz USER@newmasterserver:/tmp

On newmasterserver as root, stop all timeseries daemons:

/opt/opsview/watchdog/bin/opsview-monit stop opsview-timeseriesinfluxdbqueries

/opt/opsview/watchdog/bin/opsview-monit stop opsview-timeseriesinfluxdbupdates

Then install InfluxDB on newmasterserver by following the instructions in InfluxDB installation. InfluxDB should be running at the end of this process.

Import the new data:

curl -i -XPOST http://127.0.0.1:8086/query --data-urlencode "q=DROP DATABASE opsview"

cd /tmp

tar -zxvf /tmp/influxdb_backup.tar.gz

# You can overwrite the existing +metadata* files

cp /tmp/influxdb_backup/+metadata* /opt/opsview/timeseriesinfluxdb/var/data

chown -R opsview:opsview /opt/opsview/timeseriesinfluxdb/var/data

influxd restore -portable /tmp/influxdb_backup/

Note

If you get a message about skipping the “_internal” database, this is normal and can be ignored. See example below.

[root@newmasterserver tmp]# influxd restore -portable /tmp/influxdb_backup/
2018/11/25 13:40:02 Restoring shard 3 live from backup 20181125T133300Z.s3.tar.gz
2018/11/25 13:40:02 Restoring shard 4 live from backup 20181125T133300Z.s4.tar.gz
2018/11/25 13:40:02 Restoring shard 2 live from backup 20181125T133300Z.s2.tar.gz
2018/11/25 13:40:02 Meta info not found for shard 1 on database _internal. Skipping shard file 20181125T133300Z.s1.tar.gz

Finally, restart timeseries daemons:

/opt/opsview/watchdog/bin/opsview-monit start opsview-timeseriesinfluxdbqueries

/opt/opsview/watchdog/bin/opsview-monit start opsview-timeseriesinfluxdbupdates

If you do not have any collectors in your system for this migration, then after the Opspack step below you can start Opsview:

sudo su - opsview

opsview_watchdog all start

Convert old slaves to new collectors

On newmasterserver as root, update the /opt/opsview/deploy/etc/opsview_deploy.yml file with the new collectors. For example:

collector_clusters:
  collectors-a:
    collector_hosts:
      ov-slavea1:
        ip: 192.168.17.123
        user: cloud-user
      ov-slavea2:
        ip: 192.168.17.84
        user: cloud-user
  collectors-b:
    collector_hosts:
      ov-slaveb1:
        ip: 192.168.17.158
        user: cloud-user
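With many slaves, the collector block can be generated rather than typed. The sketch below assumes a hypothetical input format of one "cluster host ip user" line per collector, grouped by cluster, and prints the same two-space-indented layout as the example above; review the output before adding it to the file. The function name is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: turn "cluster host ip user" lines on stdin into a
# collector_clusters block. Lines for the same cluster must be adjacent;
# blank lines and lines starting with # are skipped.
collector_yaml() {
    awk '
        NF == 0 || $1 ~ /^#/ { next }
        !started { print "collector_clusters:"; started = 1 }
        $1 != cluster {
            cluster = $1
            printf "  %s:\n    collector_hosts:\n", cluster
        }
        {
            printf "      %s:\n        ip: %s\n        user: %s\n", $2, $3, $4
        }'
}
```

For example: printf '%s\n' 'collectors-a ov-slavea1 192.168.17.123 cloud-user' | collector_yaml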

Then, from /opt/opsview/deploy, run opsview-deploy to set up these collectors:

./bin/opsview-deploy lib/playbooks/setup-everything.yml

# Run this as well to ensure all the latest packages are installed

./bin/opsview-deploy -t os-updates lib/playbooks/setup-hosts.yml

After this, your Opsview 5.4 Slaves will have been converted to 6.3 Collectors, automatically added to the system and listed in the Unregistered Collectors grid.

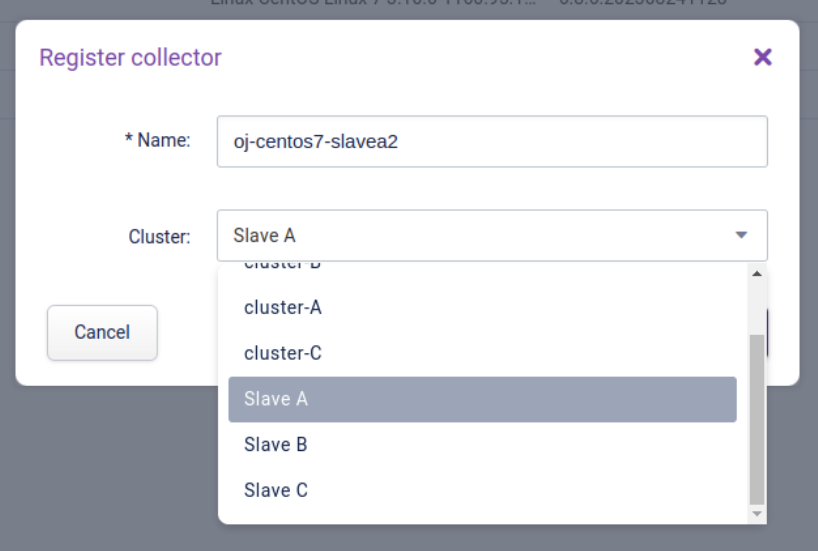

To assign the collectors to a cluster, go to Configuration > Collector Management in the web user interface and switch to the Unregistered Collectors view.

You can then register them to the existing clusters.

You may need to remove the hosts defined in Opsview that represented your old slaves.

Temporarily turn off notifications

There will likely be some configuration changes that need to be made to get all of the migrated service checks working, so notifications should be silenced temporarily to avoid spamming all your users.

In the web user interface, go to Configuration > My System > Options and set Notifications to Disabled.

Apply changes and start monitoring

In the web user interface, go to Configuration > System > Apply Changes.

This will start monitoring with the migrated configuration database.

Other data to copy

Environmental modifications

If your Opsview 5 master server has any special setup for monitoring, this will need to be set up on your new collectors. This could include things like:

- Dependency libraries required for monitoring (e.g. Oracle client libraries, VMware SDK)

- SSH keys used by check_by_ssh to remote hosts

- Firewall configurations to allow master or slave to connect to remote hosts

- Locally installed plugins that are not shipped in the product (see below for more details)

Opsview Web UI - Dashboard Process Maps

If you have any Dashboard Process Maps, these need to be transferred and moved to the new location.

On oldmasterserver as the nagios user:

cd /usr/local/nagios/var/dashboard/uploads

tar -cvf /tmp/dashboard.tar.gz --gzip *.png

scp /tmp/dashboard.tar.gz USER@newmasterserver:/tmp

On newmasterserver as the opsview user:

cd /opt/opsview/webapp/docroot/uploads/dashboard

tar -xvf /tmp/dashboard.tar.gz

Test by checking the process maps in dashboard are loaded correctly.

Note

If you saw a broken image before transferring the files, it may be cached by the browser. You may need to clear the browser cache and reload the page.
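The process map, host template icon, Autodiscovery and RSS/Atom transfers all follow the same pattern: archive matching files in one directory, copy the archive, then extract it in the new directory. The hypothetical helper below sketches the archiving step with GNU find and GNU tar, and fails with a message when nothing matches instead of producing an empty or broken archive. Unlike the shell's *, find -name '*' also matches hidden files.

```shell
#!/bin/sh
# Hypothetical helper: archive the entries in SRC_DIR whose names match
# PATTERN (a find -name glob such as '*.png' or '[1-9]*') into ARCHIVE,
# which should be an absolute path. Fails if nothing matches.
# Usage: pack_files SRC_DIR PATTERN ARCHIVE
pack_files() (
    src=$1 pattern=$2 out=$3
    cd "$src" || exit 1
    n=$(find . -mindepth 1 -maxdepth 1 -name "$pattern" | wc -l | tr -d ' ')
    if [ "$n" -eq 0 ]; then
        echo "nothing matching '$pattern' in $src" >&2
        exit 1
    fi
    # -print0/--null keep unusual file names intact; tar recurses into any
    # matching directories, as the original "tar ... *" commands do.
    find . -mindepth 1 -maxdepth 1 -name "$pattern" -print0 |
        tar --null --files-from - -czf "$out"
)
```

For example, pack_files /usr/local/nagios/var/dashboard/uploads '*.png' /tmp/dashboard.tar.gz would stand in for the tar command in the process maps steps; the archive is copied and extracted as before.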

Opsview Web UI - Host Template Icons

If you have any Host Template icons, used by BSM components, these need to be transferred and moved to the new location.

On oldmasterserver as the nagios user:

cd /usr/local/nagios/share/images/hticons/

tar -cvf /tmp/hticons.tar.gz --gzip [1-9]*

scp /tmp/hticons.tar.gz USER@newmasterserver:/tmp

On newmasterserver as the opsview user:

cd /opt/opsview/webapp/docroot/uploads/hticons

tar -xvf /tmp/hticons.tar.gz

Test by checking the Host Templates list page, the BSM View or BSM dashlets.

Opsview Autodiscovery log files

If you have historical Autodiscovery log files that you wish to retain, these need to be transferred and moved to the new location.

On oldmasterserver as the nagios user:

cd /usr/local/nagios/var/discovery/

tar -cvf /tmp/autodiscovery.tar.gz --gzip *

scp /tmp/autodiscovery.tar.gz USER@newmasterserver:/tmp

On newmasterserver as the opsview user:

cd /opt/opsview/autodiscoverymanager/var/log/

tar -xvf /tmp/autodiscovery.tar.gz

RSS/Atom files

If you use the RSS Notification Method and want to retain the existing notifications, you will need to transfer them and move them to the right location.

On oldmasterserver as the nagios user:

cd /usr/local/nagios/atom

tar -cvf /tmp/atom.tar.gz --gzip *.store

scp /tmp/atom.tar.gz USER@newmasterserver:/tmp

On newmasterserver as the opsview user:

cd /opt/opsview/monitoringscripts/var/atom

tar -xvf /tmp/atom.tar.gz

Opsview Monitoring plugins

Any custom plugins, event handlers or notification scripts will need to be re-uploaded:

| Type | Old location | Instructions |
|---|---|---|
| Plugins | /usr/local/nagios/libexec | Importing Plugins |
| Event Handlers | /usr/local/nagios/libexec/eventhandlers | Importing Event Handlers |
| Notification Scripts | /usr/local/nagios/libexec/notifications | Importing Notification Scripts |

You can use this script to check that all plugins, event handlers and notification scripts recorded in the database exist in the right place on the filesystem.
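As a rough, hypothetical alternative to that script, the function below compares a list of file names against a directory. It is not the Opsview script and does not read the database; the function name and the idea of saving a name list from oldmasterserver are assumptions made for illustration.

```shell
#!/bin/sh
# Hypothetical helper: read file names (one per line) on stdin and print
# those that are not present as regular files in DIR. Returns 1 if any
# are missing.
missing_files() {
    dir=$1
    status=0
    while IFS= read -r name; do
        [ -n "$name" ] || continue
        if [ ! -f "$dir/$name" ]; then
            printf '%s\n' "$name"
            status=1
        fi
    done
    return "$status"
}
```

For example, save the plugin names on oldmasterserver with find /usr/local/nagios/libexec -maxdepth 1 -type f -printf '%f\n' > /tmp/old-plugins.txt, copy that list across, and run missing_files DIR < /tmp/old-plugins.txt, where DIR is the directory your plugins were imported into.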

LDAP syncing

If you use opsview_sync_ldap, copy /usr/local/nagios/etc/ldap from oldmasterserver to /opt/opsview/coreutils/etc/ldap on newmasterserver. Ensure the files are owned by the opsview user.

Opsview configuration database

We do not change your Opsview master configuration because you may have custom configuration changes. However, the old Opsview 5.X Host Templates are no longer relevant for Opsview 6.3, so you will need to manually remove the following Host Templates:

- Application - Opsview BSM

- Application - Opsview Common

- Application - Opsview Master

- Application - Opsview NetFlow Common

- Application - Opsview NetFlow Master

You will need to manually add the following Host Templates to the hosts where those Opsview Components have been added (usually the Orchestrator server):

- Opsview - Component - Agent

- Opsview - Component - Autodiscovery Manager

- Opsview - Component - BSM

- Opsview - Component - DataStore

- Opsview - Component - Downtime Manager

- Opsview - Component - Executor

- Opsview - Component - Flow Collector

- Opsview - Component - Freshness Checker

- Opsview - Component - License Manager

- Opsview - Component - Load Balancer

- Opsview - Component - Machine Stats

- Opsview - Component - MessageQueue

- Opsview - Component - Notification Center

- Opsview - Component - Orchestrator

- Opsview - Component - Registry

- Opsview - Component - Results Dispatcher

- Opsview - Component - Results Flow

- Opsview - Component - Results Forwarder

- Opsview - Component - Results Live

- Opsview - Component - Results Performance

- Opsview - Component - Results Recent

- Opsview - Component - Results Sender

- Opsview - Component - Results SNMP

- Opsview - Component - Scheduler

- Opsview - Component - SNMP Traps

- Opsview - Component - SNMP Traps Collector

- Opsview - Component - SSH Tunnels

- Opsview - Component - State Changes

- Opsview - Component - TimeSeries

- Opsview - Component - TimeSeries Enqueuer

- Opsview - Component - Timeseries InfluxDB

- Opsview - Component - TimeSeries RRD

- Opsview - Component - Watchdog

- Opsview - Component - Web

NetAudit

On oldmasterserver as the opsview user:

cd /var/opt/opsview/repository/

tar -cvf /tmp/netaudit.tar.gz --gzip rancid/

scp /tmp/netaudit.tar.gz USER@newmasterserver:/tmp

On newmasterserver as the opsview user:

cd /opt/opsview/netaudit/var/repository/

rm -fr rancid

tar -xvf /tmp/netaudit.tar.gz

cd /opt/opsview/netaudit/var

rm -fr svn

svn checkout file:///opt/opsview/netaudit/var/repository/rancid svn

Test by looking at the history of the NetAudit hosts.

Also test by making a change on a router and checking that it is picked up.

Reporting Module

On newmasterserver as root user, stop Reporting Module:

/opt/opsview/watchdog/bin/opsview-monit stop opsview-reportingmodule

On oldmasterserver, take a backup of your jasperserver database and transfer it to newmasterserver:

mysqldump -u root -pPASSWORD --default-character-set=utf8mb4 --add-drop-database --extended-insert --opt --databases jasperserver | sed 's/character_set_client = utf8 /character_set_client = utf8mb4 /' | gzip -c > /tmp/reporting.sql.gz

scp /tmp/reporting.sql.gz USER@newmasterserver:/tmp

On newmasterserver, restore the database:

echo "drop database if exists jasperserver" | mysql -u root -pPASSWORD

( echo "SET FOREIGN_KEY_CHECKS=0;"; zcat /tmp/reporting.sql.gz ) | mysql -u root -pPASSWORD

On newmasterserver as root user, run the upgrade and start the Reporting Module:

/opt/opsview/jasper/installer/postinstall_root

/opt/opsview/watchdog/bin/opsview-monit start opsview-reportingmodule

In the Reporting Module UI, you will need to reconfigure the ODW datasource connection, as it should now point to newmasterserver.

Test with a few reports.

Network Analyzer

For each Flow Collector in 5.4 (which can be the master or any slave node), you will need to copy the historical data. The instructions below are for the master; similar steps will need to be carried out for each old slave/new collector.

For the master, on oldmasterserver as root user, run:

cd /var/opt/opsview/netflow/data

tar -cvf /tmp/netflow.tar.gz --gzip *

scp /tmp/netflow.tar.gz USER@newmasterserver:/tmp

On newmasterserver as root user, run:

cd /opt/opsview/flowcollector/var/data/

tar -xvf /tmp/netflow.tar.gz

chown -R opsview:opsview .

Network devices will need to be reconfigured to send their Flow data to the new master and/or collectors.

Service Desk Connector

Due to path and user changes, you will need to reconfigure the Service Desk Connector manually. See the Service Desk Connector documentation.

SMS Module

This is not supported out of the box in this version of the product. Please contact the Opsview Customer Success Team.

SNMP Trap MIBs

If you have any specific MIBs for translating incoming SNMP Traps, these need to exist in the new location for every snmptrapscollector in each cluster that monitors incoming traps. Note that in a distributed system this will be on every collector and the new master. For the collectors this happens automatically as part of the “Convert old slaves to new collectors” stage; however, a manual step is needed on the master if you want to monitor incoming traps from hosts in the Master Monitoring cluster.

On oldmasterserver as the nagios user:

# copy over any MIBs and subdirectories (excluding symlinks)

cd /usr/local/nagios/snmp/load/

find -maxdepth 1 -mindepth 1 -not -type l -print0 | tar --null --files-from - -cvf /tmp/custom-mibs.tar.gz --gzip

scp /tmp/custom-mibs.tar.gz USER@newmasterserver:/tmp

On newmasterserver as the opsview user:

# unpack and copy the extra MIBs

cd /opt/opsview/snmptraps/var/load/

tar -xvf /tmp/custom-mibs.tar.gz

# now become the root user and run the following command

/opt/opsview/watchdog/bin/opsview-monit restart opsview-snmptrapscollector

Test by sending a trap to the master from a host in its cluster and checking that it arrives as a result for the host in the Navigator screen. You can also add the “SNMP Trap - Alert on any trap” service check to the host if it does not have any trap handlers. With the service check added to the host, you can use SNMP Tracing to capture and read any trap sent from that host.

SNMP Polling MIBs

If you have any specific MIBs for translating OIDs for check_snmp plugin executions, these need to exist in the /usr/share/snmp/mibs/ or /usr/share/mibs/ location for the orchestrator to use in the newmasterserver. All OIDs specified in opsview of the form of “

On oldmasterserver as the root user:

# copy over any /usr/share MIBs and subdirectories (excluding symlinks)

cd /usr/share/snmp/mibs #[DEBIAN,UBUNTU]

find -maxdepth 1 -mindepth 1 -not -type l -print0 | tar --null --files-from - -cvf /tmp/share-snmp-mibs.tar.gz --gzip

scp /tmp/share-snmp-mibs.tar.gz USER@newmasterserver:/tmp

cd /usr/share/mibs #[CENTOS,RHEL,OL]

find -maxdepth 1 -mindepth 1 -not -type l -print0 | tar --null --files-from - -cvf /tmp/share-mibs.tar.gz --gzip

scp /tmp/share-mibs.tar.gz USER@newmasterserver:/tmp

# copy over any custom MIBs and subdirectories (excluding symlinks)

cd /usr/local/nagios/snmp/load/

find -maxdepth 1 -mindepth 1 -not -type l -print0 | tar --null --files-from - -cvf /tmp/custom-mibs.tar.gz --gzip

scp /tmp/custom-mibs.tar.gz USER@newmasterserver:/tmp

On newmasterserver as the root user:

# install mib package for Debian/Ubuntu

apt-get install snmp-mibs-downloader

# Note: At this point you should also install any other proprietary MIB packages necessary for translating MIBs used for SNMP Polling in your system

# e.g. apt-get install {{your-MIB-Packages}} #[DEBIAN,UBUNTU]

# e.g. yum install {{your-MIB-Packages}} #[CENTOS,RHEL,OL]

# unpack and copy the extra MIBs

cd /usr/share/snmp/mibs #[DEBIAN,UBUNTU]

tar -xvf /tmp/share-snmp-mibs.tar.gz

mkdir opsview && cd opsview && tar -xvf /tmp/custom-mibs.tar.gz

cd /usr/share/mibs #[CENTOS,RHEL,OL]

tar -xvf /tmp/share-mibs.tar.gz

mkdir opsview && cd opsview && tar -xvf /tmp/custom-mibs.tar.gz
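The commands above choose between /usr/share/snmp/mibs and /usr/share/mibs by distribution. The sketch below makes that choice from the ID and ID_LIKE fields of /etc/os-release; the function name is hypothetical, and the mapping only mirrors the two cases on this page.

```shell
#!/bin/sh
# Hypothetical helper: print the system MIB directory for the distribution
# described by an os-release file (default /etc/os-release).
mib_dir() {
    osrel=${1:-/etc/os-release}
    # Source the file in a subshell so its variables do not leak out.
    id=$(. "$osrel" && printf '%s %s' "${ID:-}" "${ID_LIKE:-}")
    case " $id " in
        *" debian "*|*" ubuntu "*) echo /usr/share/snmp/mibs ;;
        *" rhel "*|*" centos "*|*" ol "*|*" fedora "*) echo /usr/share/mibs ;;
        *) echo "unrecognised distribution: $id" >&2; return 1 ;;
    esac
}
```

For example, cd "$(mib_dir)" before extracting the matching archive.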

SNMP configuration

Ansible will set up a default SNMP configuration on newmasterserver and all collectors. It overwrites the following files:

- /etc/default/snmpd #[DEBIAN,UBUNTU]

- /etc/snmp/snmpd.conf #[DEBIAN,UBUNTU]

- /etc/snmp/snmptrapd.conf #[DEBIAN,UBUNTU]

- /etc/sysconfig/snmpd #[CENTOS,RHEL,OL]

- /etc/sysconfig/snmptrapd #[CENTOS,RHEL,OL]

- /etc/snmp/snmpd.conf #[CENTOS,RHEL,OL]

Edit these files and merge any custom changes you need for your environment.
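To see what needs merging, you can compare a saved copy of each file with the version Opsview Deploy wrote. This sketch assumes the old files were copied into a backup directory with their paths preserved (for example BACKUP_DIR/etc/snmp/snmpd.conf); the function name and the optional ROOT argument are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: show a unified diff between backed-up SNMP files and
# the versions now on the system, for files present in both places.
# Usage: show_snmp_changes BACKUP_DIR [ROOT]   (ROOT defaults to /)
show_snmp_changes() {
    backup=${1%/}
    root=${2:-/}
    root=${root%/}
    for f in /etc/default/snmpd /etc/snmp/snmpd.conf /etc/snmp/snmptrapd.conf \
             /etc/sysconfig/snmpd /etc/sysconfig/snmptrapd; do
        [ -f "$backup$f" ] && [ -f "$root$f" ] || continue
        # diff exits 1 when the files differ; that is expected here.
        diff -u "$backup$f" "$root$f" || true
    done
}
```

Lines starting with "-" are settings from the old file that Opsview Deploy has replaced or removed.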

Note

As these files are managed by Opsview Deploy, they will be overwritten the next time opsview-deploy is run, so you will need to merge any changes back in again.